The LiDAR sensor, which recognizes objects by projecting light onto them, acts as the eyes of an autonomous vehicle, helping to determine the distance to surrounding objects and the speed and direction of the vehicle. To detect unpredictable road conditions and respond nimbly, the sensor must perceive the sides and rear of the vehicle as well as the front. However, it has so far been impossible to observe the front and rear simultaneously, because conventional systems rely on a rotating LiDAR sensor.

To overcome this issue, a research team led by Professor Junsuk Rho (Department of Mechanical Engineering and Department of Chemical Engineering) and Ph.D. candidates Gyeongtae Kim, Yeseul Kim, and Jooyeong Yun (Department of Mechanical Engineering) from POSTECH, in collaboration with Professor Inki Kim (Department of Biophysics) from Sungkyunkwan University, has developed a fixed, non-rotating LiDAR sensor with a 360° field of view.

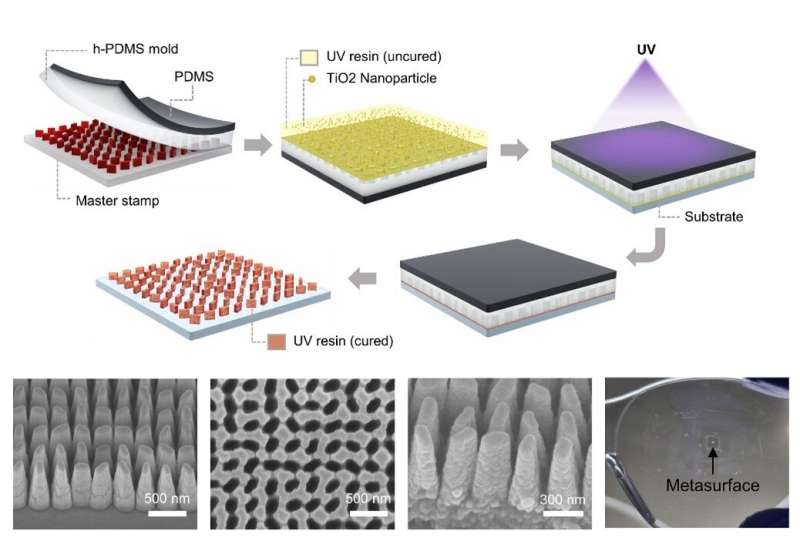

The new sensor is drawing attention as an original technology that could enable ultra-small LiDAR sensors, because it is built on a metasurface: an ultra-thin, flat optical device only one-thousandth the thickness of a human hair.

A metasurface can greatly widen the viewing angle over which a LiDAR recognizes objects in three dimensions. The research team extended the viewing angle of the LiDAR sensor to 360° by modifying the design of the nanostructures that make up the metasurface and arranging them periodically.
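One textbook way to see why a periodic arrangement of nanostructures can send light into many directions at once is the grating equation: at normal incidence on a square lattice of period Λ, the diffraction order (m, n) leaves at a polar angle θ with sin θ = (λ/Λ)·√(m² + n²), and only orders with sin θ < 1 propagate. The sketch below simply counts those orders. It is an illustrative model under that assumption, not the team's design method (the source says only that the nanostructures were arranged periodically), and the function name and the wavelength and period values are hypothetical.

```python
import math


def propagating_orders(wavelength_nm: float, period_nm: float):
    """Return (m, n, polar_angle_deg) for every propagating diffraction order
    of a hypothetical square lattice illuminated at normal incidence.

    Illustrative grating-equation model only: sin(theta) = (lambda / period) * sqrt(m^2 + n^2).
    """
    ratio = wavelength_nm / period_nm
    # |m| * ratio must stay below 1, so |m| can be at most floor(period / wavelength).
    m_max = int(period_nm // wavelength_nm)
    orders = []
    for m in range(-m_max, m_max + 1):
        for n in range(-m_max, m_max + 1):
            s = ratio * math.hypot(m, n)  # sine of the polar angle of order (m, n)
            if s < 1.0:  # propagating order; s >= 1 would be evanescent
                orders.append((m, n, math.degrees(math.asin(s))))
    return orders


if __name__ == "__main__":
    orders = propagating_orders(wavelength_nm=1000.0, period_nm=2500.0)
    print(f"{len(orders)} propagating orders")
    print(f"widest angle: {max(a for _, _, a in orders):.1f} deg")
```

With the hypothetical values above (λ = 1000 nm, Λ = 2500 nm), 21 orders propagate; a longer period yields more orders (more dots), while a period closer to the wavelength pushes the first orders toward grazing angles.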

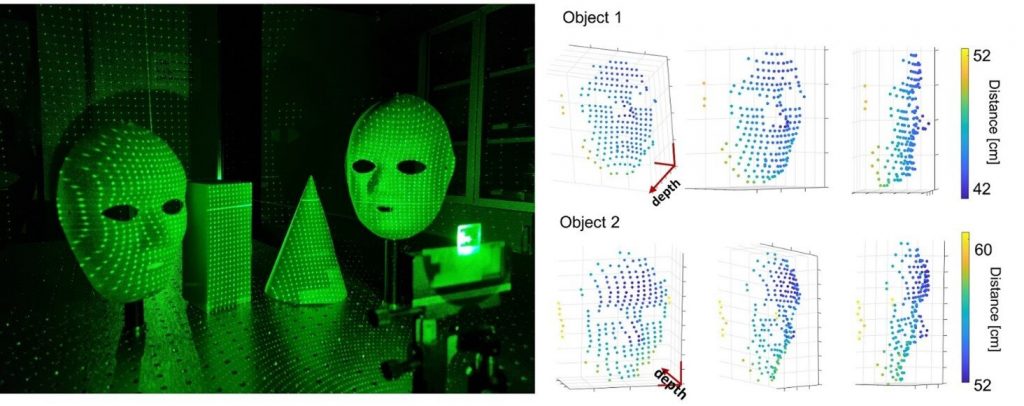

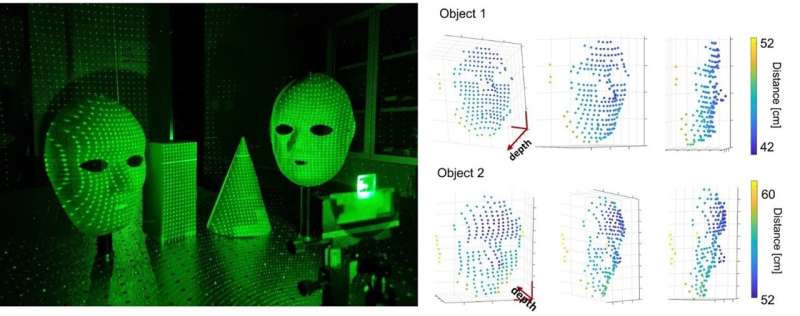

Three-dimensional information about objects across the full 360° can be extracted by projecting an array of more than 10,000 light dots from the metasurface onto the objects and photographing the resulting dot pattern with a camera.
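The source does not describe how depth is computed from the photographed dots. A common approach in dot-projection (structured-light) systems is triangulation: if the projector and camera are separated by a baseline b and modeled as a rectified pinhole pair with focal length f (in pixels), a dot whose image shifts by a disparity d pixels lies at depth z = f·b / d. The sketch below assumes that simplified single-camera geometry; the function names, the dot-matching input format, and the numbers are hypothetical, and the team's full-space setup may differ.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth (m) of one dot from its disparity, assuming a rectified projector-camera pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the sensor")
    return focal_px * baseline_m / disparity_px


def triangulate_dots(matches: dict, focal_px: float, baseline_m: float) -> dict:
    """Map each matched dot ID to a depth estimate.

    matches: hypothetical format {dot_id: (x_projector_px, x_camera_px)}, i.e. the
    column where the dot was projected and the column where the camera saw it.
    Dots with non-positive disparity (mismatches or invalid geometry) are skipped.
    """
    depths = {}
    for dot_id, (x_proj, x_cam) in matches.items():
        disparity = x_proj - x_cam
        if disparity > 0:
            depths[dot_id] = depth_from_disparity(disparity, focal_px, baseline_m)
    return depths


if __name__ == "__main__":
    # Hypothetical dots: 500 px focal length, 5 cm baseline.
    dots = {"a": (300.0, 275.0), "b": (300.0, 250.0), "c": (100.0, 120.0)}
    for dot_id, z in triangulate_dots(dots, focal_px=500.0, baseline_m=0.05).items():
        print(f"dot {dot_id}: {z:.2f} m")
```

Repeating this for every one of the thousands of detected dots yields a sparse 3D point cloud; nearer objects produce larger disparities, which is why depth resolution degrades with distance in this model.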

A similar dot-projection approach is used in the iPhone's face recognition function (Face ID). The iPhone creates its dot pattern with a dot projector, but this device has several limitations: the uniformity and viewing angle of the dot pattern are limited, and the device itself is large.

The study is significant because it shows that the technology allowing cell phones, augmented and virtual reality (AR/VR) glasses, and unmanned robots to recognize 3D information about their surroundings can be fabricated with nano-optical elements. Using nanoimprint technology, the new device can easily be printed on various curved surfaces, such as glasses or flexible substrates, which opens up applications in AR glasses, regarded as a core technology of future displays.

Professor Junsuk Rho explained, “[We] have proved that we can control the propagation of light in all angles by developing a technology more advanced than the conventional metasurface devices.”

He added that “this will be an original technology that will enable an ultra-small and full-space 3D imaging sensor platform.”

The research was published in Nature Communications.